AI is using more power than ever – what that means for you.

AI has exploded in the last couple of years, and it is power hungry! The surge in AI energy consumption is unlike anything the energy sector has ever seen. In fact, it shows up in the rising cost of both electricity and natural gas. In the chart below, natural gas is shown on the left and electricity on the right, and both reveal the same problem over a short period of time: data center energy consumption is driving up costs for everyone.

The Connection Between AI and Energy Costs

As you can see, AI has exploded in popularity in just a few years. The cost of this surge is no longer hidden. It is showing up directly in the rising cost of electricity and in the cost of natural gas, since natural gas is often used to generate electricity for these systems. From 2020 to 2025, natural gas prices increased by 7.5% annually, while electricity prices rose 11.1% annually over that same period. Together, that’s an average increase of 9.3% per year, which is 37% higher than the historical average.
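To make the arithmetic concrete, here is a minimal sketch of how the 9.3% average is reached and what it would add up to over time. It assumes the two rates are simply averaged and that the increase compounds once a year for five years; the function name `compound_growth` and the five-year span are illustrative assumptions, not figures from the chart.

```python
# Annual increases quoted above (percent per year, 2020 to 2025).
gas_rate = 7.5
electricity_rate = 11.1

# Simple average of the two rates.
average_rate = (gas_rate + electricity_rate) / 2


def compound_growth(rate_percent: float, years: int) -> float:
    """Total percent increase after `years` of yearly compounding (an assumption)."""
    return ((1 + rate_percent / 100) ** years - 1) * 100


years = 5  # assumed span, matching 2020 to 2025
print(f"Average annual increase: {average_rate:.1f}%")
print(f"Cumulative increase over {years} years: {compound_growth(average_rate, years):.0f}%")
```

Under those assumptions, a 9.3% yearly increase works out to bills roughly 56% higher after five years.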

Crypto Mining and Energy Demands

Crypto mining originally didn’t take specialized equipment or use a lot of energy. Over time, the problem solving required to mine a coin has become more energy intensive and now requires specialized, energy-intensive servers. After 2020, the growing energy demands of crypto mining, along with more AI data centers, have had a real effect on energy prices. This is expected to continue for the next 7-10 years.

This shift has compounded the overall data center energy consumption problem. Both crypto mining and AI workloads are stressing the power grid and contributing to the steady increase in energy costs.

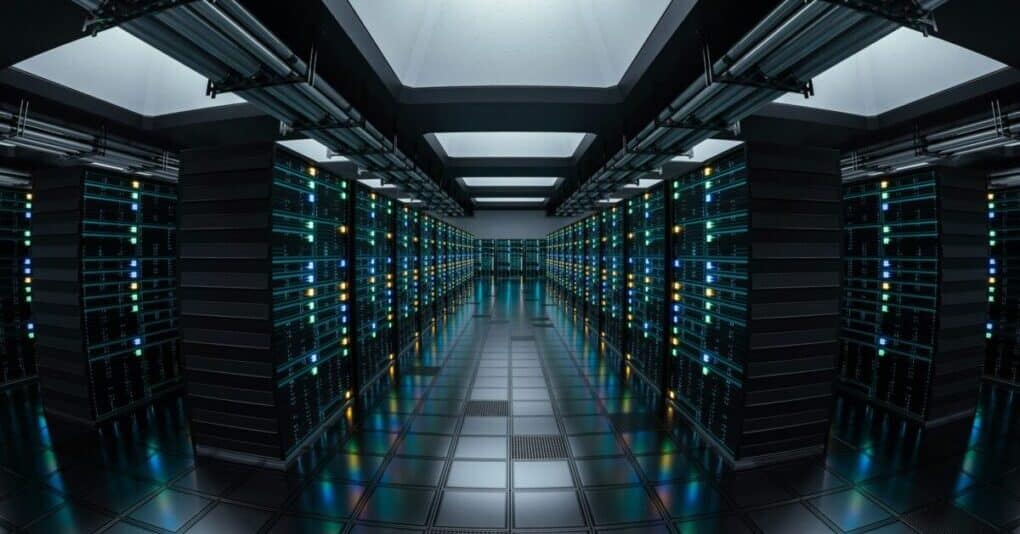

How Much Energy Do AI and Data Centers Use?

Each AI data center takes as much electricity as 250,000-500,000 residential homes. Crypto data centers’ energy requirements can vary greatly depending on size, but the average energy need is equivalent to that of 100,000-150,000 residential homes.

In fact, a single AI data center proposed for Cheyenne, Wyoming would outstrip all the electricity produced in Wyoming, with the data center drawing from 1.8 to 10 gigawatts of power. At a continuous 10 gigawatts, the yearly energy consumption would be 87.6 terawatt hours (10 gigawatts × 8,760 hours in a year). That would be roughly double the 43.2 terawatt hours of electricity currently produced in all of Wyoming. This is just for one AI data center.
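The 87.6 terawatt hour figure corresponds to a continuous draw of 10 gigawatts for a full year. The sketch below reproduces that conversion for both ends of the 1.8 to 10 range, assuming the data center runs at a constant load around the clock; the function name `annual_energy_twh` is illustrative only.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float) -> float:
    """Terawatt hours used in one year at a constant draw of `power_gw` gigawatts."""
    return power_gw * HOURS_PER_YEAR / 1000  # gigawatt hours -> terawatt hours


wyoming_generation_twh = 43.2  # statewide figure quoted above

for power_gw in (1.8, 10):
    energy = annual_energy_twh(power_gw)
    ratio = energy / wyoming_generation_twh
    print(f"{power_gw} GW -> {energy:.1f} TWh per year ({ratio:.2f}x Wyoming's generation)")
```

At the low end of the range the data center would use a bit over a third of Wyoming's current generation, and at the high end about twice as much.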

Why Do Data Centers Need So Much Power?

The answer is straightforward: computing, cooling, and redundancy.

- Computing Power: Running thousands of high-performance servers simultaneously consumes massive amounts of electricity.

- Cooling Systems: Those servers produce heat, and cooling systems must work around the clock to prevent overheating.

- Redundancy: Most data centers build in backup power systems to avoid outages, which adds another layer of energy use.

See The Problem?

When you add together AI energy consumption and data center energy consumption, the ripple effects hit everyone, because as an energy consumer you are now competing with some very energy-hungry data centers.

Infrastructure is another hidden cost. Building the power lines, substations, and other equipment to support new data centers isn’t free. These expenses are spread across all ratepayers, meaning regular consumers like us share the financial burden of powering these data centers.

What’s Next?

The next article examines a case study home and the potential savings on gas and electric bills. We take a look at a Net Zero Ready Home (ready for solar panels) first because solar panels continue to improve and get cheaper over time. A Net Zero Ready Home is no longer a significant investment. The payback period is now shorter than ever, with gas and electric bills rising about 10% on average each year, offering immediate financial benefits to homeowners.